We should start thinking of humans as actors in a world of software processes, rather than thinking of software processes as tools in a world of humans. I'll try to explain this "inside-out" view on Human-Computer Interaction (HCI).

Like roads and buildings, software applications are of course tools for humans. The highest goal of software is (almost) always to serve its human users. Yet like roads and buildings, they exist in the larger context of an infrastructure. Thinking of one piece of software as a tool for one human risks underprioritising the bigger picture of interoperability between apps. The start and end of a road need to connect to other roads or destinations. Buildings need to play a role among the other buildings, squares, roads and car parks near them. And software applications need to interact with other pieces of software.

We can design a software application that helps a single user perform a task, like sending an email. The humans are fully in control, and emails are sent in human-readable formats.

But humans collaborate. Starting from activity theory, we can for instance take into account the recipient of the email, who is probably also a human, and who might be triggered to complete their own tasks in response, like editing a spreadsheet, submitting an expense, or scheduling a meeting.

And rather than focusing on making specific tasks easier, we can also design software applications from value-centered principles. This helps build a more coherent experience in the longer run, for instance by working on tools that, together, "connect the world" or "organize the world's information".

But although activity-centered and value-centered HCI design take a wider picture into account than the more focused task-centered HCI design does, they still model software applications as tools that humans use.

Current view: humans buying various incompatible tools and using them through HCI


As a result of this "tools" view, as app developers we often consider UX as the highest goal of everything we build. And indeed, apps with bad UX suck. But I would argue UX is just a tiny cog in a bigger system. Even the user is a cog in a bigger system. And an app that has great UX but that acts as a data silo is like fast food: it feels good but it's not good for us.

We do of course think of data portability at times. For instance, consider two users, one using GitHub issues and another using Jira, who want to have the same view on the data, and have their intentions preserved during conflict resolution. Now, let's reconsider why we want to build that data sync feature.

One answer is: because it's good UX for both users, which will make them buy our software (business-driven design). A slightly better answer is: because it's good UX and we want to build human-centric technology (user-driven design). Or maybe even: because data portability is a core value in our engineering philosophy (value-driven design).

But if you look at the system as a whole (Jira + GitHub + the BridgeBot that syncs them + 2 users), the data entry interface is just one small component of that larger system.

And I think data entry through screens, keyboards and click/touch gestures will eventually lose its position as the dominant form of human-computer interaction (HCI). Maybe we should stop thinking of HCI as the most salient aspect of a computer system.

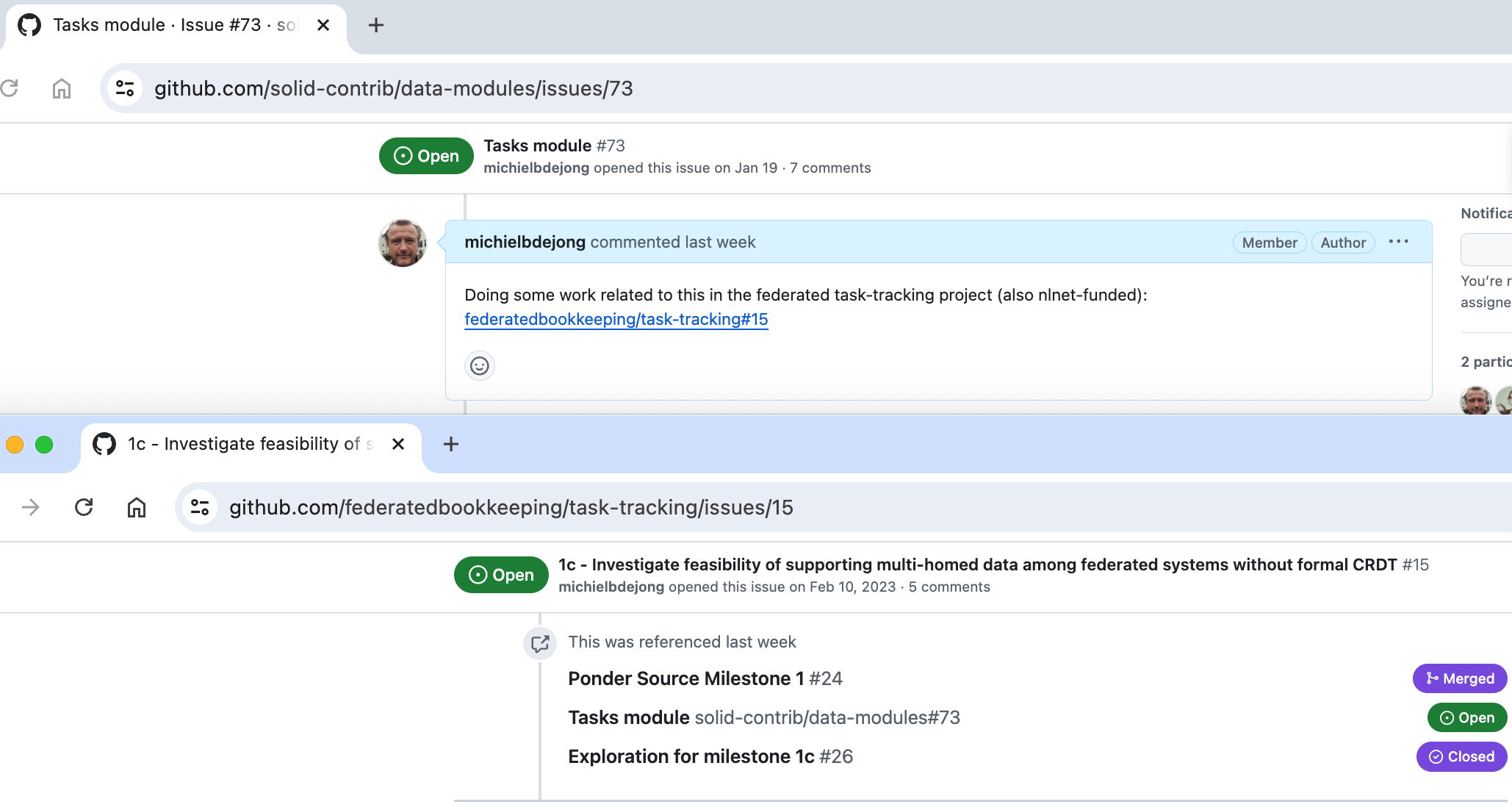

As another example of a product feature, consider the feature on GitHub issues where you can mention another issue in a text comment. This will become a hyperlink, and the other issue will get an event on its timeline with a backlink.

Mentioning another issue in an issue comment


Great UX, right? I use it all the time. But if we start thinking of an issue tracker not as an isolated tool for humans but as a replica of the universal global tasks graph on the internet of data, then we might have designed this feature differently. As a user, I wasn't forced to pick a relationship type for this link between issues, and whereas the phrase "work related to this" is perfectly human-readable, it's not machine-readable.
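To make the difference concrete, here is a minimal Python sketch of what a machine-readable link between issues could look like. The `LinkType` vocabulary, the `IssueLink` record and the `repo#12` identifier format are all hypothetical and not modeled on any real tracker's API; the point is only that a typed link lets a bot answer a question like "what blocks this issue?", which a free-text comment such as "work related to this" cannot.

```python
from dataclasses import dataclass
from enum import Enum


class LinkType(Enum):
    # Hypothetical relationship vocabulary, chosen for illustration only.
    RELATES_TO = "relates-to"
    BLOCKS = "blocks"
    DUPLICATES = "duplicates"


@dataclass(frozen=True)
class IssueLink:
    source: str  # illustrative identifier format, e.g. "repo#7"
    target: str
    link_type: LinkType


def blocking_issues(links: list[IssueLink], issue: str) -> list[str]:
    """Return the issues that block `issue`.

    A bot can answer this from typed links, but not from free text.
    """
    return [
        link.source
        for link in links
        if link.target == issue and link.link_type is LinkType.BLOCKS
    ]


links = [
    IssueLink("repo#7", "repo#12", LinkType.BLOCKS),
    IssueLink("repo#9", "repo#12", LinkType.RELATES_TO),
]
print(blocking_issues(links, "repo#12"))  # ['repo#7']
```

The user still only has to make one extra choice (the link type), but that single choice is what turns a human-readable note into data another component can act on.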

Every time we humans enter free-form data into an application, a kitten dies. Free-form data entry makes data portability and process automation very hard. We'll say "yes, but these are just my own mental notes". But these notes contain data that could have been used to trigger events elsewhere. Free-form entry also often causes more data entry work down the line, where a human has to copy-paste that data into another user interface: work that could have been avoided if we had made the user articulate their intent in a machine-readable way.

In general, I think it's time we look at HCI inside-out: from the larger picture of "the internet of data" in which HCI encapsulates the user, rather than from the smaller picture of the user, in which HCI encapsulates one particular app. I have four reasons for saying that.

A better view: humans playing a role in the world of data, bubbled-up in HCI


There is no single API to multi-homed data

First of all, if you look at "user-owned data across apps", there are automatically multiple HCI interfaces at play, at least one at each app. So there is no "the" user interface in a federated computer system. That means it can't be the central part of the system.

AI will help free us from tedious HCI

Second, AI will change the mode and frequency of human data entry. When humans have something to say to a computer system, they may in the future do so through voice control, using full natural-language sentences. Humans will spend less time and effort on the mere act of HCI.

The one sentence "make arrangements for that conference" could replace hundreds of clicks that we currently have to do to book registration, accommodation, travel, and to arrange the payments, expense administration and invoice processing for it. And as HCI becomes more of an invisible commodity, with probably thousands of apps sharing a single "personal assistant" user interface, it will also become less of a unique selling point for apps.

Humans should not be a conduit for data portability

Third, a lot of data entry currently is due to the computer systems relying on humans to implement data portability. In the future, once we get data portability with on-the-fly schema migrations working, like what we're trying to achieve in the Federated Task Tracking project, humans will only need to enter data once (for instance at the hotel booking site) and it will automatically propagate to their calendar, payment processing system, and bookkeeping system, without the user needing to click on an email, download a PDF, click in their bookkeeping software, upload the PDF, and enter all the data that OCR got wrong.
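As a rough illustration of "enter once, propagate everywhere", here is a minimal Python sketch. It assumes the booking site emits a structured `HotelBooking` record and that each downstream system registers a small function translating that record into its own schema. All names and field layouts are hypothetical, and a fixed per-system function is a much simpler stand-in for real on-the-fly schema migration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class HotelBooking:
    """Hypothetical record, entered once by the user at the booking site."""
    hotel: str
    check_in: date
    check_out: date
    amount_cents: int  # assumes the booking is priced in euros


# Each downstream system keeps its own schema; one translation function per
# system maps the booking into it. Target field names are illustrative only.
def to_calendar(b: HotelBooking) -> dict:
    return {
        "summary": f"Stay at {b.hotel}",
        "start": b.check_in.isoformat(),
        "end": b.check_out.isoformat(),
    }


def to_bookkeeping(b: HotelBooking) -> dict:
    euros, cents = divmod(b.amount_cents, 100)
    return {
        "description": f"Accommodation: {b.hotel}",
        "amount": f"{euros}.{cents:02d}",
        "currency": "EUR",
    }


Migration = Callable[[HotelBooking], dict]


def propagate(b: HotelBooking, subscribers: dict[str, Migration]) -> dict[str, dict]:
    """Deliver one booking to every subscribed system, in that system's schema."""
    return {name: migrate(b) for name, migrate in subscribers.items()}


booking = HotelBooking("Hotel Example", date(2024, 5, 6), date(2024, 5, 9), 42000)
records = propagate(booking, {"calendar": to_calendar, "bookkeeping": to_bookkeeping})
print(records["bookkeeping"]["amount"])  # 420.00
```

A payment processing system would simply be one more entry in the `subscribers` mapping; the human never re-types the booking details.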

All too often, the currently dominant view of apps as tools (each one individually useful enough for the user to buy) leaves data portability out of scope, and relies on humans copying or downloading from one app's interface, and pasting or uploading into another app they hold.

Modeling the user as a system component

Fourth and finally, we also want to model the user's behaviour, in the context of the bigger system. Consider the computer system (the internet of data) as a liquid, and users floating in bubbles inside that liquid. So HCI is then the inside lining of that user bubble.

Now, to properly understand the way the human user fits into the bigger system, we need to model that bubble from the outside, including not only HCI but also a model of how the user will process the input it gets through the UX.

In the end, the user is a piece of (carbon-based) software that receives and outputs data events. To effectively model each user as yet another replica of a distributed data set, we should essentially turn the app inside out, not view it as a tool that humans can buy and hold, but as a way to wrap a human user into a bubble, UX/HCI on the inside, floating through the liquid data.
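One way to read "the user is a piece of software that receives and outputs data events" is that a human and a bot can share one interface. The sketch below assumes a hypothetical `Component` protocol with a single `handle` method: `InvoiceBot` is ordinary software, while `UserModel` is a deliberately crude model of what a human will do with what the UI shows them. Neither corresponds to a real system.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Event:
    kind: str
    payload: str


class Component(Protocol):
    """Anything that consumes one event and emits zero or more events."""
    def handle(self, event: Event) -> list[Event]: ...


class InvoiceBot:
    # Hypothetical software component: turns a booking into an invoice request.
    def handle(self, event: Event) -> list[Event]:
        if event.kind == "booking":
            return [Event("invoice-request", event.payload)]
        return []


class UserModel:
    # Hypothetical model of a human: predicts which events the user emits
    # after the UI shows them an event. Here we assume they approve invoices.
    def handle(self, event: Event) -> list[Event]:
        if event.kind == "invoice-request":
            return [Event("invoice-approved", event.payload)]
        return []


def run(components: list[Component], first: Event) -> list[Event]:
    """Feed events through the components in order, collecting the full trail."""
    trail, pending = [first], [first]
    for component in components:
        pending = [out for ev in pending for out in component.handle(ev)]
        trail.extend(pending)
    return trail


trail = run([InvoiceBot(), UserModel()], Event("booking", "Hotel Example"))
print([e.kind for e in trail])  # ['booking', 'invoice-request', 'invoice-approved']
```

A realistic user model would be probabilistic and far richer; the point is only that it plugs into the same pipeline as the bot, with the UX sitting inside its `handle` method.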

When trying to design computer systems that work well, we should also engineer the user component. Nudge the user to correctly codify their intent, so that the next component in the system will be able to understand and preserve that intent. Don't allow the user to output free text that is not machine-readable. This is a goal that becomes obvious once we adopt this new inside-out view of HCI for multi-homed data.

There is a whole stream of research into interfaces that reverse-engineer the structured meaning of a human user's output, such as Ink & Switch's PotLuck. These interfaces try to achieve both: extracting machine-readable data from the user's brain while still allowing them to write free-form text.

And in local-first research, I think an understanding is arising that user interfaces should try to explicitly articulate the intent of a particular user gesture they capture, so that it can be propagated to other system components. Simply displaying an action's result back to the user is enough for the user, but not for the rest of the system.
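A minimal sketch of why this matters, assuming a hypothetical task record edited concurrently by two users. If each UI only ships the state it ended up displaying, a last-writer-wins merge silently drops one of the edits; if it ships the intent (`Rename`, `MarkDone`), every replica can replay both. The types and the `apply_intent` function are illustrative and not taken from any particular local-first library.

```python
from dataclasses import dataclass, replace
from typing import Union


@dataclass(frozen=True)
class Task:
    title: str
    done: bool


@dataclass(frozen=True)
class Rename:
    # Hypothetical intent: "I changed the title", and nothing else.
    title: str


@dataclass(frozen=True)
class MarkDone:
    # Hypothetical intent: "I completed this task".
    pass


Intent = Union[Rename, MarkDone]


def apply_intent(task: Task, intent: Intent) -> Task:
    """Apply one user intent to a task, touching only the field it is about."""
    if isinstance(intent, Rename):
        return replace(task, title=intent.title)
    if isinstance(intent, MarkDone):
        return replace(task, done=True)
    raise TypeError(f"unknown intent: {intent!r}")


base = Task("Book hotel", done=False)
alice, bob = Rename("Book hotel for conference"), MarkDone()

# Result-only sync: each UI ships its resulting snapshot; the last one wins.
snapshots = [apply_intent(base, alice), apply_intent(base, bob)]
print(snapshots[-1])  # Task(title='Book hotel', done=True): Alice's rename is lost

# Intent sync: every replica replays both intents on the shared base.
print(apply_intent(apply_intent(base, alice), bob))
# Task(title='Book hotel for conference', done=True)
```

Here the two intents commute because they touch different fields, so replay order does not matter; a real system still needs a rule for genuinely conflicting intents, such as two concurrent renames.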

With this inside-out view on human-computer interaction, I think we are better equipped to think about computer systems where data is free to move across multiple federated replicas, and where humans play an internal role, bubbled up with HCI.

HCI is not just about a smooth UX. It's about helping and nudging the user to be a better cog in a world of machine-readable data portability.

Many thanks to Michael Toomim who read an earlier version of this blog post and gave very valuable feedback!